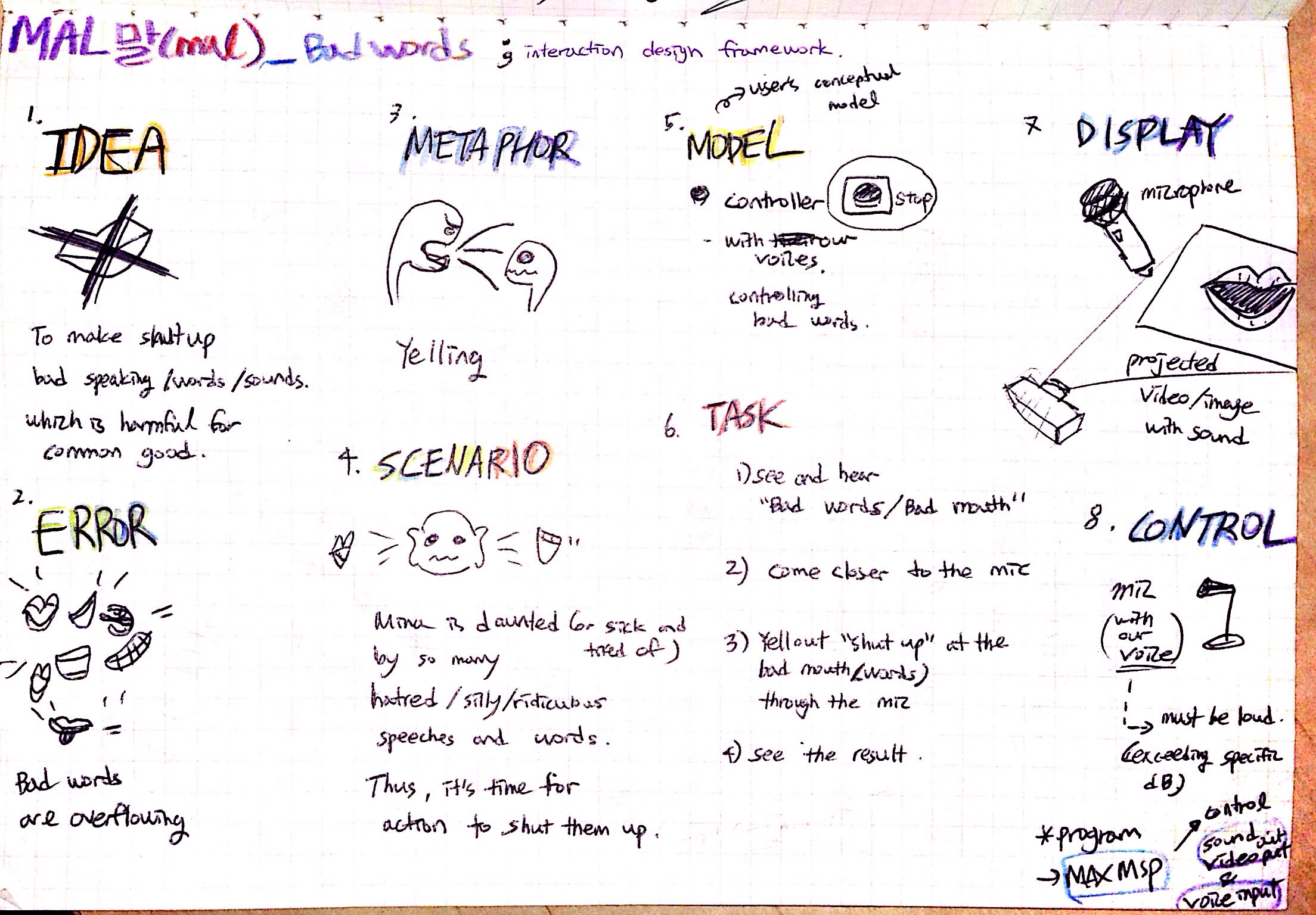

MALMAL, work in progress, 2017

MALMAL means ‘bad word’, combining the French adjective ‘mal’ (bad) with the Korean word that sounds the same, ‘말’ (mal, word or language).

I had worked on this idea before by making an iOS application. Now I am continuing with the same concept, looking for another form of expression with different materials.

The basic idea is illustrated in the framework above, but I am still looking for a more effective way to handle the interaction and to deliver my intention.

So far I have been working with MaxMSP to trigger sounds and videos, and I have tested projection mapping with videos I made.

This is my to-do list:

- Devise a more intuitive interaction between the artwork and the audience (participants)

- Design more sounds and videos, or new ones

- Figure out the proper tools: am I still going to use Max? If so, for which interaction?

- Decide how to present the work

Research on related (art)works

There are several works with a similar subject, as well as works with a really intuitive and effective interaction for delivering their meaning.

-

Good reference for intuitive interaction

- Little Boxes, Bego M. Santiago, 2011

-

Toy buttons with a similar idea (yelling to make someone shut up, a funny way of delivery)

- Shut up Button

- Donald Trump Button, which plays silly remarks by Donald Trump

- Another Trump Button

-

Hacktivism (art)works with similar idea

- Burned Your Tweet, (physically) burning Trump’s inflammatory tweets in real time

- Demagogiaprotektor, Daniel Cseh: a 3D-printed middle-finger skeleton that responds to (political bullshit) remarks, powered by a servomotor and Google’s speech recognition

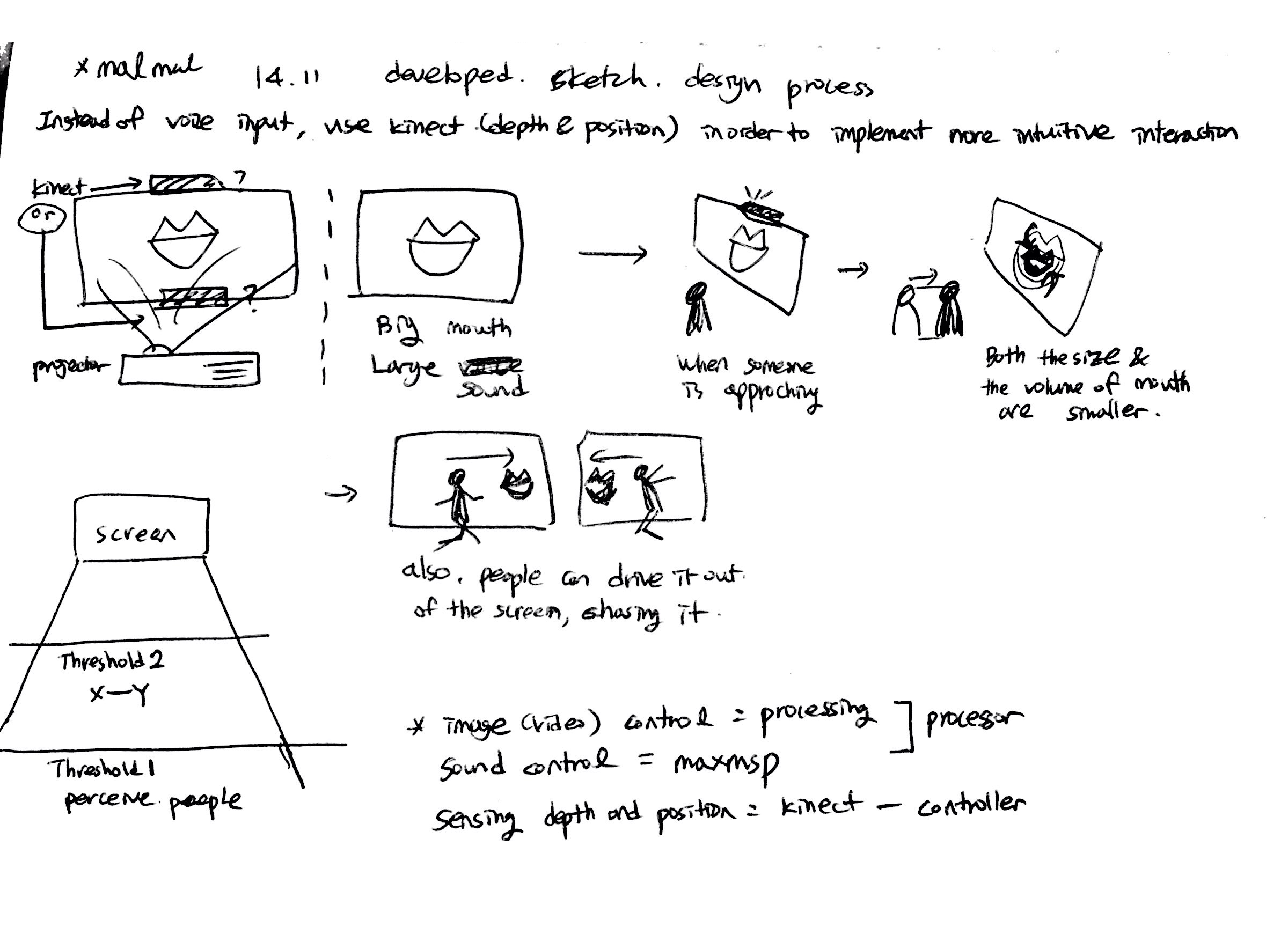

Developed sketch of the design & process, 14.Nov

Instead of the voice input with MaxMSP that I considered in the last idea sketch, I’ll use a Kinect to detect depth and position in order to realize a more intuitive interaction.

I think this approach will make it easier for the audience to figure out how to interact with the work, and will also deliver my intention better: to make the bad words shut up and kick them out.

-

Control

- Processor: Processing (image/video), MaxMSP (sound)

- Controller: Kinect (sensing depth and position)

-

Task

- When nobody is nearby, a big mouth is shown on the screen with a loud sound.

- As someone approaches the screen, both the size of the mouth and its volume decrease.

- People can also drive the mouth off the screen by chasing it to the left or right.
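The three behaviours above come down to two small mappings: distance to size/volume, and the person's horizontal position to a push on the mouth. Below is a minimal Python sketch of that logic, not the project's Processing code. It assumes the Kinect step already yields the nearest person's distance in millimetres (or `None` when nobody is detected) and their horizontal position normalised to 0..1; the thresholds `NEAR_MM` and `FAR_MM` and all function names are hypothetical placeholders to be tuned on site.

```python
# Hypothetical sketch of the Task behaviours; constants are placeholders, not measured values.
NEAR_MM = 500    # at or closer than this distance, the mouth is smallest and quietest
FAR_MM = 3000    # at or beyond this distance, nobody counts as "nearby"
MIN_SCALE, MAX_SCALE = 0.2, 1.0
MIN_VOLUME, MAX_VOLUME = 0.1, 1.0


def lerp(a, b, t):
    """Linear interpolation from a (t=0) to b (t=1)."""
    return a + (b - a) * t


def closeness(distance_mm):
    """0.0 when the person is far away or absent, 1.0 when very close."""
    if distance_mm is None:
        return 0.0
    t = (FAR_MM - distance_mm) / (FAR_MM - NEAR_MM)
    return max(0.0, min(1.0, t))


def mouth_scale_and_volume(distance_mm):
    """The closer the person, the smaller and quieter the mouth."""
    c = closeness(distance_mm)
    return lerp(MAX_SCALE, MIN_SCALE, c), lerp(MAX_VOLUME, MIN_VOLUME, c)


def push_mouth(mouth_x, person_x, step=0.05):
    """Move the mouth one step away from the person (positions normalised 0..1)."""
    if person_x is None:
        return mouth_x
    direction = 1.0 if mouth_x >= person_x else -1.0
    return mouth_x + direction * step


def is_off_screen(mouth_x):
    """True once the mouth has been chased past the left or right edge."""
    return mouth_x < 0.0 or mouth_x > 1.0


# Usage: a person standing at x=0.3 keeps pushing the mouth right until it leaves.
x = 0.5
while not is_off_screen(x):
    x = push_mouth(x, person_x=0.3)
```

A linear ramp is the simplest choice here; an eased curve would make the mouth react more gently at a distance, but that is a design decision to test with the actual projection.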

-

To-do list

- Making the video interaction with Kinect using Processing (in progress now)

- Making the sound interaction with MaxMSP

- Connecting the sound with the video and the Kinect

- Setting up the projection

19.11.2017

Modification

I’m trying to use MaxMSP instead of Processing for the whole process: video, audio and Kinect input.

-

Tools for Kinect with MaxMSP

- Synapse: I couldn't open it on my Mac OS X, so I cannot use it.

- jit.freenect.grab: what I'm using now. I still have to set 1) the proper depth value and 2) the video movement according to the x/y coordinates. (But is this possible with Max?)
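Both open points (a proper depth value and x/y coordinates) come down to thresholding the depth image to a distance band and averaging the positions of the pixels that remain. Below is a minimal sketch of that logic in Python, assuming each depth frame arrives as a 2D list of distance values where 0 means "no reading". The real units and resolution of jit.freenect.grab output are not checked here, and the function names are hypothetical. In Max, the same steps would have to be rebuilt from Jitter matrix operations.

```python
# Hypothetical sketch: locate whatever is inside a depth band in one depth frame.
# Assumption: depth is a list of rows of ints; 0 marks pixels with no reading.

def centroid_in_depth_band(depth, near, far):
    """Return the (x, y) centroid, normalised 0..1, of pixels with near <= d <= far,
    or None if no pixel falls inside the band."""
    height = len(depth)
    width = len(depth[0]) if height else 0
    sum_x = sum_y = count = 0
    for y, row in enumerate(depth):
        for x, d in enumerate(row):
            if d != 0 and near <= d <= far:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    # Normalise pixel indices so the result does not depend on camera resolution.
    return (sum_x / count / max(width - 1, 1),
            sum_y / count / max(height - 1, 1))


def nearest_depth(depth):
    """Smallest valid (non-zero) distance in the frame, or None if there is none."""
    valid = [d for row in depth for d in row if d != 0]
    return min(valid) if valid else None


# Usage with a tiny made-up 4x4 frame: a "person" at 1200 in the middle,
# plus one far-away pixel at 4000 that the band filters out.
frame = [
    [0, 0, 0, 0],
    [0, 1200, 1200, 0],
    [0, 1200, 1200, 0],
    [0, 0, 0, 4000],
]
pos = centroid_in_depth_band(frame, near=1000, far=2000)
```

Multiplying the normalised x by the projection width gives a horizontal position to move the video to, and `nearest_depth` is one possible input for the mouth's size and volume.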